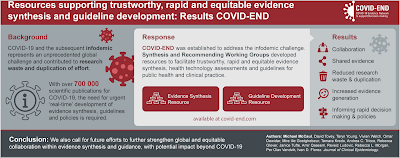

The COVID-19 pandemic was associated not only with the rapid worldwide spread of a virus, but also with the spread of large amounts of information, not all of which was trustworthy or credible. Experts call this an "infodemic." The COVID-19 Evidence Network to support Decision-making (COVID-END) was established to improve the synthesis and dissemination of trustworthy information at a pace that could keep up with the rapidly changing landscape of knowledge on COVID-19.

In a paper published in this month's issue of the Journal of Clinical Epidemiology, McCaul and colleagues describe how the COVID-19 pandemic created an urgent need to understand the etiology of the disease and strategies for managing it, and to disseminate this information widely. However, a lack of collaboration led to duplication of work across institutions and countries, which hampered these efforts. COVID-END, comprising two working groups dedicated to overseeing the coordination and dissemination of trustworthy evidence syntheses and guidelines, was established in response to these unprecedented needs. The effort also included an Equity Task Group that evaluated the impact of evidence syntheses and recommendations on health and socioeconomic disparities arising from or exacerbated by the pandemic.

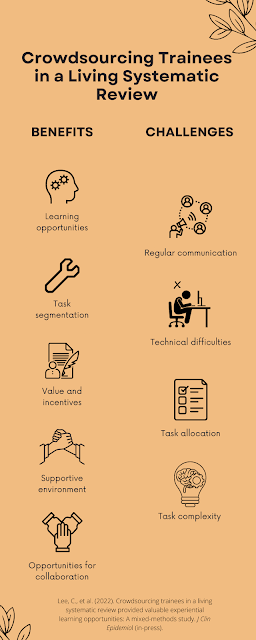

The goal of the project, in the authors' words, was to support the "evidence supply side" by promoting already available resources and work led by institutions across the globe, both for those involved in evidence synthesis and for those formulating recommendations based on the evidence. To avoid duplicated effort, the project urged guideline developers, for instance, to search for existing high-quality, up-to-date guidelines before beginning work on new recommendations. The authors further highlight the development and use of living systematic reviews, which are continually updated as new evidence becomes available, as a way to improve the timeliness of evidence syntheses while reducing the effort put into new projects.

McCaul, M., Tovey, D., Young, T., et al. (2022). Resources supporting trustworthy, rapid and equitable evidence synthesis and guideline development: Results from the COVID-19 Evidence Network to support Decision-making (COVID-END). J Clin Epidemiol 151: 88-95. Manuscript available at the publisher's website.
