Living systematic reviews (LSRs) continue to be a topic of interest among systematic review and guideline developers, as evidenced by our previous posts on the topic here, here, and here. While automation and machine learning have begun to ease the generally time- and resource-intensive process of keeping evidence syntheses perpetually up to date, some aspects of LSR development still require the human touch. Now, a recently published mixed-methods study discusses the successes and challenges of using a crowdsourcing approach to keep the LSR wheels turning.

The article describes the process of involving trainees in the development of a living systematic review and network meta-analysis (NMA) on drug therapy for rheumatoid arthritis. The authors posit that evidence-based medicine is a key pillar of learning for trainees, but that trainees may learn better through an experiential rather than a purely didactic approach. Participating in a real-life systematic review may offer exactly this kind of experiential learning.

In short, the team first applied machine learning to sort through an initial database search and identify likely randomized controlled trials; these records were then further assessed through the crowdsourcing platform Cochrane Crowd. Next, trainees, ranging from undergraduate students to practicing rheumatologists and researchers and recruited through Canadian and Australian rheumatology mailing lists, assessed the remaining articles for eligibility and extracted data from the included studies.

Training included a mix of online webinars, one-on-one sessions, and a handbook. Discrepancies between trainees' assessments were referred to an expert member of the team. The authors then collected both quantitative and qualitative feedback about trainees' experiences of taking part in the project through an electronic survey and one-on-one interviews.

Overall, the 21 trainees surveyed rated their training as adequate and their experience as generally positive. Respondents cited a better understanding of PICO criteria, familiarity with the outcome measures used in rheumatology, and experience assessing studies' risk of bias as the greatest learning benefits.

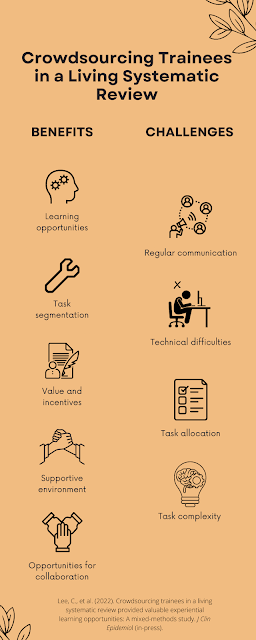

Of the 16 who participated in follow-up interviews, the majority (94%) described a practical and enjoyable experience. Trainees particularly valued the project's use of task segmentation, whereby specific tasks (e.g., eligibility assessment versus data extraction) could be "batch-processed," allowing each trainee to pick up the task that best matched the time and focus they had available. Trainees also expressed appreciation for the international collaboration involved in the review, as well as the feeling of meaningfully contributing to the project.

Notable challenges included unclear communication regarding deadlines and expectations, as well as technical glitches on the platforms used for screening and extraction. Though task segmentation was seen as a benefit, it also had drawbacks: more repetitive tasks such as eligibility assessment risked becoming tedious, while tasks requiring more focus, such as data extraction, could be difficult to fit into an already busy daily schedule. To address these issues, the authors suggest improving communication with regular, frequent updates and deadline reminders, resolving technological glitches, and carefully matching tasks to the specific skill sets and availability of each trainee.

Lee, C., Thomas, M., Ejaredar, M., Kassam, A., Whittle, S.L., Buchbinder, R., ... & Hazlewood, G.S. (2022). Crowdsourcing trainees in a living systematic review provided valuable experiential learning opportunities: A mixed-methods study. J Clin Epidemiol (in press). Manuscript available at the publisher's website here.