Although it is currently the only tool recommended by the Cochrane Handbook for assessing risk of bias in non-randomized studies of interventions, the Risk Of Bias In Non-randomized Studies of Interventions (ROBINS-I) tool can be complex and difficult to use effectively for reviewers who lack specific training or expertise in its application. Previous posts have summarized research examining the reliability of ROBINS-I, suggesting that reliability can improve when reviewers are trained. Now, a study from Igelström and colleagues finds that the tool is commonly modified or used incorrectly, potentially affecting the certainty of evidence or strength of recommendations resulting from synthesis of these studies.

The authors reviewed 124 systematic reviews published across two months in 2020, using A MeaSurement Tool to Assess systematic Reviews (AMSTAR) to operationalize the overall quality of the reviews. They extracted data on how ROBINS-I was used to assess risk of bias across studies and/or outcomes, as well as the number of studies included, whether meta-analysis was performed, and whether any funding sources were declared. They then assessed whether the application of ROBINS-I was predicted by the review's overall methodological quality (as measured by AMSTAR), the performance of risk of bias assessment in duplicate, the presence of industry funding, or the inclusion of randomized controlled trials in the review.

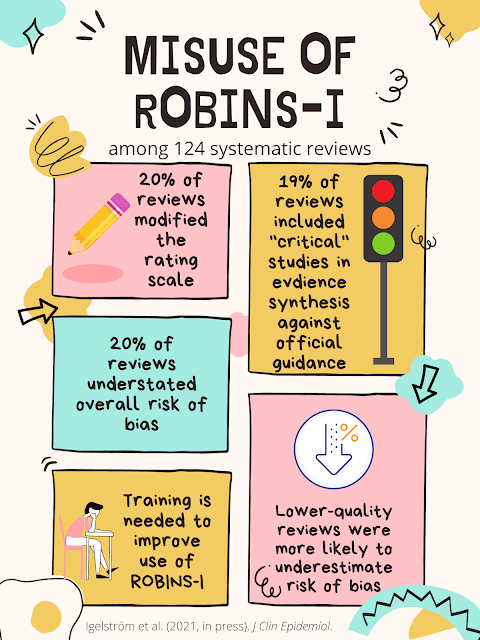

Overall methodological quality across the reviews was generally low to very low, with only 17% scoring as moderate quality and 6% scoring as high quality. Only six (5%) of the reviews reported explicit justifications for risk of bias judgments both across and within domains. Modification of ROBINS-I was common, with 20% of reviews modifying the rating scale, and six either not reporting across all seven domains or adding an eighth domain. In 19% of reviews, studies rated as having a "critical" risk of bias were included in the narrative or quantitative synthesis, against guidance for the use of the tool.

Reviews that were of higher quality as assessed by AMSTAR tended to contain fewer "low" or "moderate" risk of bias ratings and more judgments of "critical" risk of bias. Thus, the authors argue, incorrect or modified use of ROBINS-I may risk underestimating the potential risk of bias among included studies, potentially affecting the resulting conclusions or recommendations. Associations between the use of ROBINS-I and the other potential predictors, however, were less conclusive.

Igelström, E., Campbell, M., Craig, P., and Katikireddi, S.V. (2021). Cochrane's risk-of-bias tool for non-randomized studies (ROBINS-I) is frequently misapplied: A methodological systematic review. J Clin Epidemiol, in-press.

Manuscript available from publisher's website here.